Photo by Owen Beard on Unsplash

What's your ChatGPT Strategy? Updating Your Organisation’s AI Strategy in a Post-ChatGPT World

Martin Pomeroy | 19th December 2022

If you are a business leader or decision maker, you have probably heard of or tried out ChatGPT over the last few weeks. You might even have thought about how you could build this technology into your business’s medium- or long-term strategy to benefit from the useful outputs that it can produce. You may have been asked:

“What should our strategy on ChatGPT be?”

ChatGPT is an impressive tech demo that showcases the transformative capabilities of large language models (LLMs): a type of machine learning model that ‘understands’ (well, kind of) human language and can use that understanding to generate well-written, compelling text in response to a text input, or ‘prompt’.

Whilst I agree with the general buzz around how LLMs will transform the way that we work across all sectors, including finance, I am less excited about ChatGPT or its underlying model, GPT-3, specifically. What is exciting is that OpenAI’s model has made the benefits of LLMs crystal clear to less-technical business leaders and decision makers. However, a number of shortcomings and risks come hand-in-hand with GPT-3 and similar models, and with over-reliance on remote, API-led AI products. Below, I will talk about some of these shortcomings and how you might address each of them in your organisation’s AI strategy.

Data Accuracy and Provenance

Whilst ChatGPT has been referred to as a search engine killer, it’s important to know that it (like most models in the ‘LLM’ class) is frozen in time, having been trained on a snapshot of data that was collected around six months ago. This means that, unlike Google, Bing et al., the model cannot answer questions about recent events, which may be problematic if you are interested in today’s financial reports or your ESG team wants to know about the latest scandal that a portfolio company was involved in.

Even more worrying is that GPT-3 and similar LLMs are capable of making up plausible-sounding stories to satisfy queries when they don’t know the answer. Whilst OpenAI have added checks to stop ChatGPT answering questions that it doesn’t know the answer to, these can be easily bypassed or may fail in some cases. This kind of unreliable behaviour is clearly not appropriate for informing investment decisions in regulated markets.

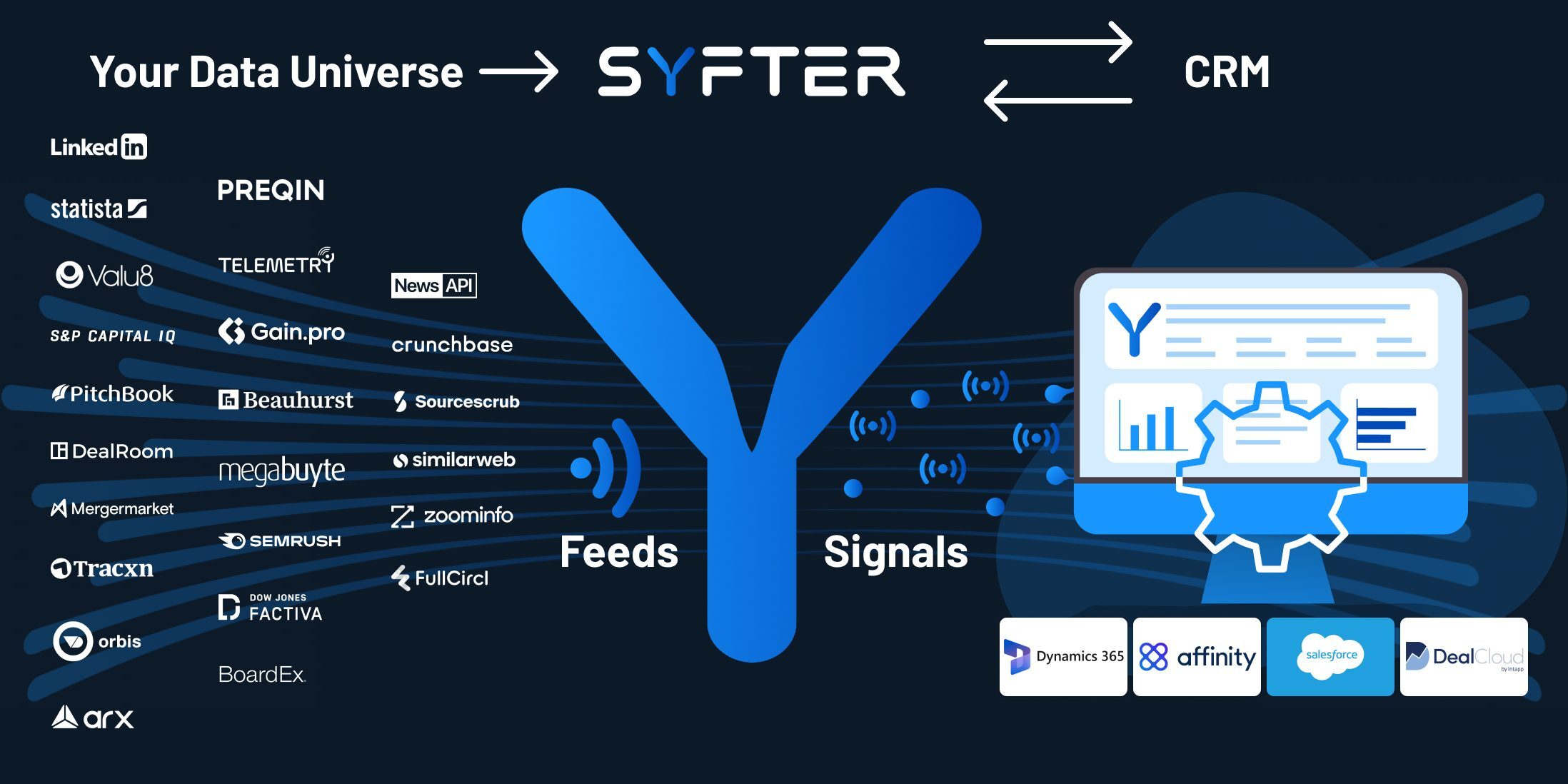

The good news is that you can still harness the power of modern AI models while retaining data provenance and auditability, if you plan for it in your strategy by considering a “retrieve and rank” AI approach. In this mode of operation, you combine traditional search, like that offered by Google, with state-of-the-art NLP to provide question-answering capabilities.

A high-level example of a retrieve-and-rank architecture. The retrieval system finds a small number of relevant documents, and the AI system finds an appropriate excerpt of text that answers the question, making all of this context available to the end user.

First, the query is used to identify a handful of potentially relevant documents from within a much larger collection. Then, a question-answering model built on the same family of neural network architecture as LLMs such as GPT (Transformer networks) is used to find the answer to the question, also noting its exact location within the document.

This strategy provides the best of both worlds: it vastly reduces the amount of research that analysts need to do to find relevant information whilst also providing data provenance and auditability to the end user, empowering analysts to use their human expertise to determine whether or not the source is trustworthy.
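To make the two stages concrete, here is a minimal, dependency-free sketch in Python. It is an illustration under stated assumptions, not a production design: the retriever uses a simple TF-IDF term-overlap score in place of a real search engine, and `read` is a deliberately naive stand-in for a Transformer question-answering model (it just picks the sentence sharing the most words with the question). All function and field names are hypothetical. What it does show is the property that matters for auditability: every answer carries the ID of its source document and the exact character offsets of the excerpt.

```python
from __future__ import annotations

import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Answer:
    doc_id: str   # which document the excerpt came from (provenance)
    text: str     # the excerpt shown to the analyst
    start: int    # character offsets of the excerpt in the source document
    end: int
    score: float


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: dict[str, str], k: int = 3) -> list[str]:
    """Stage 1 (retrieve): rank documents by TF-IDF-weighted term overlap, keep the top k."""
    counts = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    df = Counter(term for c in counts.values() for term in c)  # documents containing each term
    q_terms = set(tokenize(query))

    def score(doc_id: str) -> float:
        c = counts[doc_id]
        return sum(c[t] * math.log(1 + len(docs) / df[t]) for t in q_terms if t in c)

    ranked = sorted(((score(d), d) for d in docs), reverse=True)
    return [d for s, d in ranked[:k] if s > 0]


def read(query: str, doc_id: str, text: str) -> Answer | None:
    """Stage 2 (rank/extract): naive stand-in for a Transformer QA model.

    Returns the sentence sharing the most terms with the query, plus its exact location.
    """
    q_terms = set(tokenize(query))
    best = None
    for m in re.finditer(r"[^.!?\s][^.!?]*[.!?]?", text):
        sentence = m.group().rstrip()
        overlap = len(q_terms & set(tokenize(sentence)))
        if overlap and (best is None or overlap > best.score):
            best = Answer(doc_id, sentence, m.start(), m.start() + len(sentence), float(overlap))
    return best


def answer(query: str, docs: dict[str, str]) -> list[Answer]:
    """Retrieve candidate documents, extract an excerpt from each, best first."""
    candidates = (read(query, d, docs[d]) for d in retrieve(query, docs))
    return sorted((a for a in candidates if a is not None), key=lambda a: a.score, reverse=True)


if __name__ == "__main__":
    docs = {
        "report-2022": "Revenue grew 12% in 2022. Costs were flat.",
        "esg-note": "The company published a new ESG policy. No controversies were reported.",
    }
    for a in answer("How much did revenue grow in 2022?", docs):
        print(f"{a.doc_id}[{a.start}:{a.end}] {a.text!r}")
```

Swapping `read` for a real extractive QA model would change the quality of the answers but not the shape of the output: the analyst still sees which document each excerpt came from and where, and can judge the source for themselves.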

Model Bias and Fine-Tuning

In the days following ChatGPT’s release, a number of examples have emerged demonstrating problematic biases around gender, race and nationality deeply baked into the model. Coupled with the data provenance issue above, it would be difficult, if not impossible, to understand the extent to which advice generated by the system might have been influenced by these biases and how this might impact downstream business decisions within your organisation. It’s not just ChatGPT that has this problem: across the industry, most of the more powerful AI models are considered “black boxes”, in the sense that it’s impossible to understand exactly how they arrived at a particular decision, which makes them inappropriate for use cases where auditability is key.

This video shows a user quickly bypassing ChatGPT’s check for inappropriate prompts in order to showcase the model’s baked-in biases on race and nationality.

There are a number of solutions to this problem. There are mature techniques for extracting explanations from black-box models, and emerging techniques for probing for and removing biases from models. However, to apply these techniques you usually need direct access to the model that you are interested in analysing and/or fixing. This is not possible when your organisation’s use of AI happens entirely via remote APIs like ChatGPT. Likewise, your organisation will have no control over how frequently OpenAI’s model is re-trained or updated, and no way to let them know that it got something wrong. I have no doubt that OpenAI will continue to improve their model and work to remove its biases; however, the scale of the training data and model is immense, and it may take them years to track down and remove these biases.

Therefore, your AI strategy should almost certainly involve either developing your own models in-house or working with an AI integration partner to ensure that your models have been robustly tested for inappropriate biases and to develop any necessary explainability tools for your analysts’ workflows. This approach will also allow you (or your AI partner) to regularly re-train and update the model as needed when things do go wrong.
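One common way to test a model you control for the kind of bias described above is counterfactual testing: feed it inputs that are identical except for a demographic term and check that its output does not move. The sketch below is a minimal, hypothetical harness in Python, assuming the model under test is exposed as a `score` function returning a number (for example, a risk or sentiment score). The templates, term lists and 0.05 tolerance are illustrative placeholders; a real test suite would need far broader coverage, designed with domain and legal input.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical test cases: a template with one "{}" slot and the terms to swap into it.
CASES: dict[str, tuple[str, list[str]]] = {
    "gender": ("Assess this loan application: {} has a stable income.", ["he", "she"]),
    "nationality": ("The applicant is {} and has a stable income.",
                    ["British", "Nigerian", "Chinese", "Mexican"]),
}


def counterfactual_gap(score: Callable[[str], float], template: str, terms: list[str]) -> float:
    """Largest difference in model output across inputs that differ only in one term."""
    outputs = [score(template.format(term)) for term in terms]
    return max(outputs) - min(outputs)


def bias_report(score: Callable[[str], float],
                cases: dict[str, tuple[str, list[str]]] = CASES,
                tolerance: float = 0.05) -> dict[str, float]:
    """Return the attributes whose counterfactual gap exceeds the tolerance."""
    report = {}
    for attribute, (template, terms) in cases.items():
        gap = counterfactual_gap(score, template, terms)
        if gap > tolerance:
            report[attribute] = gap
    return report
```

Run as part of a model’s test suite, a check like this can gate every re-training of a model you own, so that a regression is caught before it reaches analysts.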

Intellectual Property and Commercial Controls

When you use ChatGPT (or similar remotely managed AI services), you are sending your data to the provider’s servers to be processed and, once the hype dies down, you will likely be paying OpenAI a few cents every time you do so.

Data provenance and bias issues notwithstanding, this might seem like a reasonable commercial trade-off. However, you may be handing over valuable information about your organisation’s business model that could be used to train the remote system in ways that benefit your competitors as much as you. Of course, it’s also pretty likely that remote AI providers will turn up the heat with price rises as organisations become more dependent upon them.

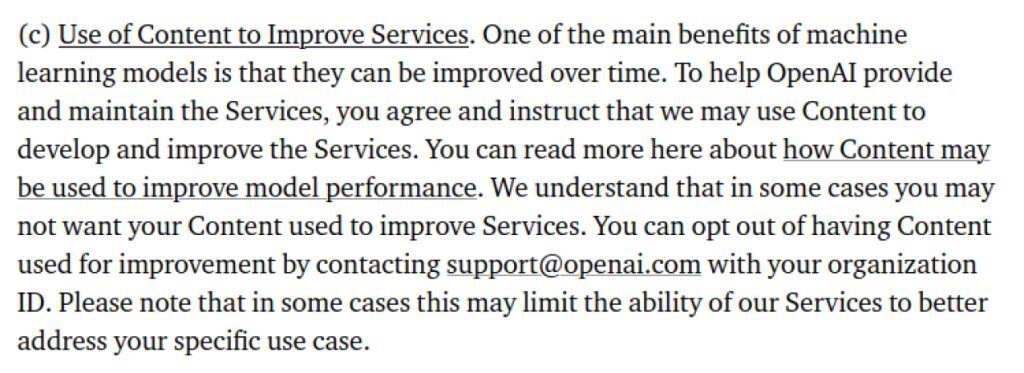

OpenAI’s terms of use allow them to use your content to improve and train their service. You can opt out, but this may lead to a degraded level of service.

If you’re uncomfortable with the thought of handing your IP over to service providers (or accepting a worse level of service by opting out), developing your own models or working with an AI implementation partner to do so may be a worthwhile option. Many of the benefits of LLMs like ChatGPT are available in much smaller models that can be trained in-house or by your partner for a specific use case. If you are working with an AI partner, make sure that their IP policy allows you to retain ownership of your training data and model.

Alternatively, if you are comfortable with sharing your IP, you should still take steps to ensure that you don’t get locked in by your vendor. One major benefit of LLM technologies is that the protocols used for system-to-system communication are often very simple: with these types of models, text goes in and more text comes back out. Therefore, you should be able to loosely couple any interactions between your internal IT systems and external APIs, so that you could easily switch provider if the commercial terms you’re offered at renewal time are not satisfactory.
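Because the contract is essentially “text in, text out”, that loose coupling can be as thin as a single interface. The Python sketch below assumes a `TextGenerator` protocol of our own invention with one `generate` method. `HttpJsonGenerator` targets a hypothetical HTTP endpoint (its URL, auth header and JSON field names are placeholders, not any real vendor’s API), and `InHouseGenerator` wraps a model you host yourself. Internal code, such as the hypothetical `summarise_filing`, only ever sees the interface.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Callable, Protocol


class TextGenerator(Protocol):
    """The only interface internal systems depend on: text in, text out."""

    def generate(self, prompt: str) -> str: ...


class HttpJsonGenerator:
    """Adapter for a hypothetical remote text-generation endpoint.

    The URL, auth header and JSON field names are placeholders: each real vendor
    has its own request/response schema and would get its own small adapter.
    """

    def __init__(self, url: str, api_key: str,
                 prompt_field: str = "prompt", output_field: str = "text") -> None:
        self.url = url
        self.api_key = api_key
        self.prompt_field = prompt_field
        self.output_field = output_field

    def generate(self, prompt: str) -> str:
        body = json.dumps({self.prompt_field: prompt}).encode("utf-8")
        request = urllib.request.Request(
            self.url,
            data=body,
            headers={"Content-Type": "application/json",
                     "Authorization": f"Bearer {self.api_key}"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=30) as response:
            return json.loads(response.read().decode("utf-8"))[self.output_field]


class InHouseGenerator:
    """Adapter for a model you host and own; `model` is any str -> str callable."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model

    def generate(self, prompt: str) -> str:
        return self.model(prompt)


def build_generator(config: dict) -> TextGenerator:
    """Switching provider is a configuration change, not a code change."""
    if config["provider"] == "http":
        return HttpJsonGenerator(config["url"], config["api_key"])
    if config["provider"] == "in_house":
        return InHouseGenerator(config["model"])
    raise ValueError(f"unknown provider: {config['provider']!r}")


def summarise_filing(filing_text: str, llm: TextGenerator) -> str:
    """Business logic written against the interface, never against a vendor SDK."""
    return llm.generate("Summarise this filing in three bullet points:\n\n" + filing_text)
```

Under this design, changing provider at renewal time means writing one new adapter and changing a configuration value; none of the business logic has to be touched.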

Conclusion

ChatGPT is an exciting development and signals a step-change in the maturity and utility of natural language processing AI models that is impossible to miss. However, it’s important to be aware of and have an opinion about the hosting, operation, auditability and ownership of AI models.

So what would my organisation’s ChatGPT strategy be? I’d use it as a flashy vehicle to get organisational buy-in for AI technologies in general, while developing our own models that we control and own.